Our novel model, which combines a multi-task point-cloud and segmentation network, bridges the gap between two different imaging domains at significantly lower runtimes than existing methods while maintaining high segmentation accuracy.

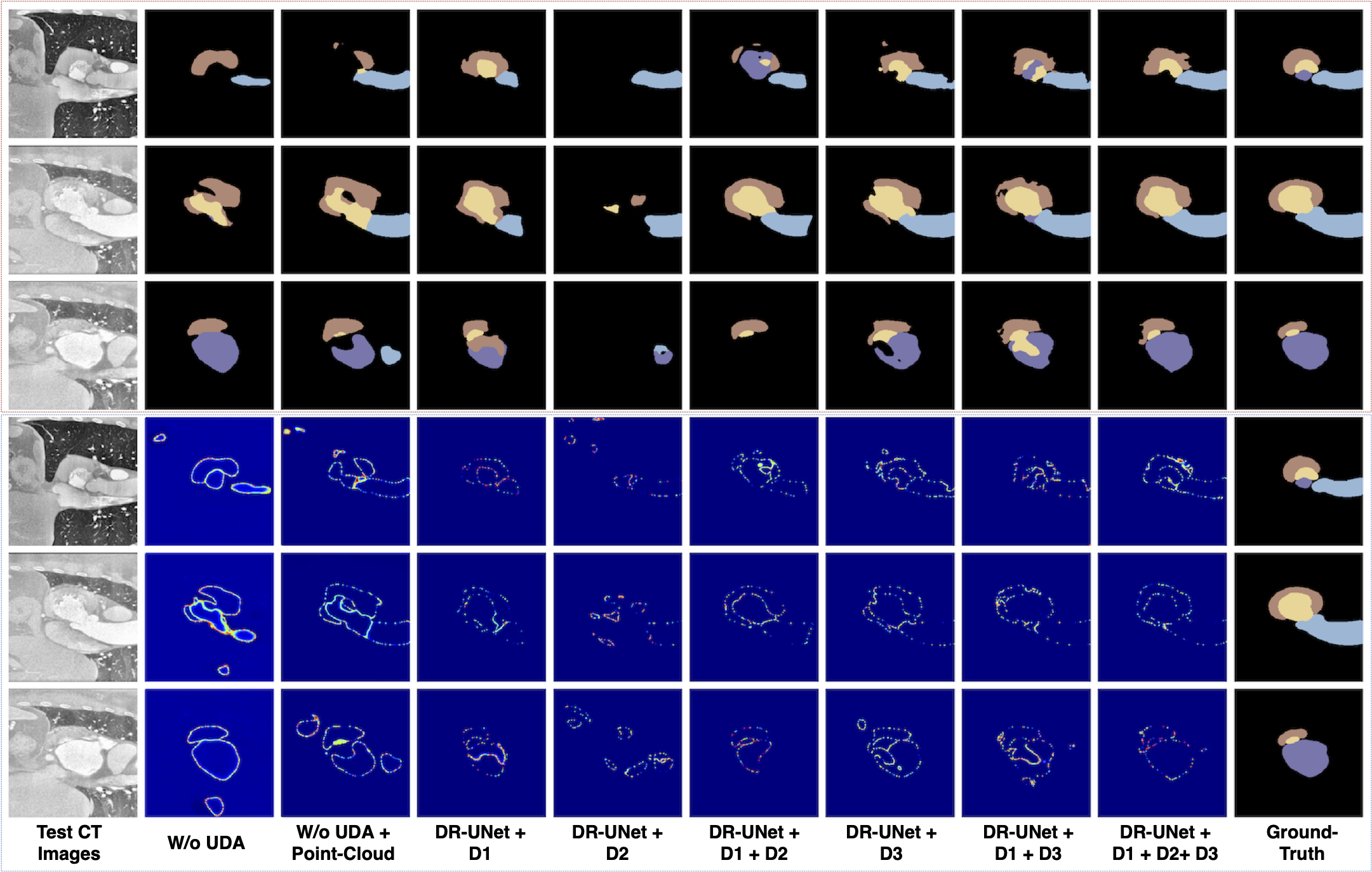

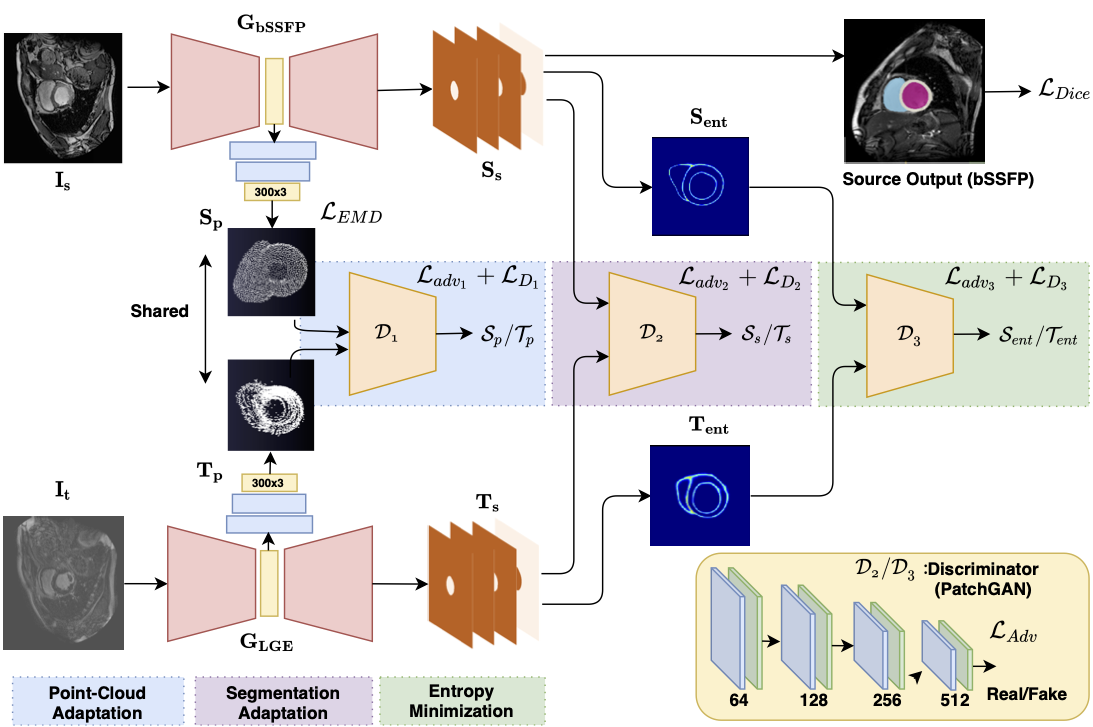

Deep learning models are sensitive to domain shift. A model trained on images from one domain often fails to generalise to images from a different domain, even when both capture similar anatomical structures, mainly because the data distributions of the two domains differ. Moreover, annotating every new modality is tedious and time-consuming, and the annotations suffer from high inter- and intra-observer variability. Unsupervised domain adaptation (UDA) methods aim to reduce the gap between source and target domains by leveraging labelled source-domain data to generate labels for the target domain. However, current state-of-the-art (SOTA) UDA methods show degraded performance when data in the source and target domains are insufficient. In this paper, we present a novel UDA method for multi-modal cardiac image segmentation. The proposed method is based on adversarial learning and adapts network features between the source and target domains in different spaces. The paper introduces an end-to-end framework that integrates: a) entropy minimisation, b) output feature space alignment and c) a novel point-cloud shape adaptation based on the latent features learned by the segmentation model. We validated our method on two cardiac datasets by adapting from the annotated source domain, bSSFP-MRI (balanced steady-state free precession MRI), to the unannotated target domain, LGE-MRI (late gadolinium enhancement MRI), for the multi-sequence dataset; and from MRI (source) to CT (target) for the cross-modality dataset. The results show that, by enforcing adversarial learning in different parts of the network, the proposed method delivers promising performance compared to other SOTA methods.
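The adversarial idea behind the three adaptation components can be illustrated with a small, framework-free sketch. It assumes a binary cross-entropy objective and a label convention (source = 1, target = 0) that the text does not state; the function names and the weights passed to `total_segmenter_loss` are hypothetical and are not taken from the paper.

```python
import math

# Assumed (hypothetical) domain labels: the source text does not fix a convention.
SOURCE, TARGET = 1.0, 0.0


def bce(prob, label, eps=1e-12):
    """Binary cross-entropy for one discriminator output in (0, 1)."""
    return -(label * math.log(prob + eps)
             + (1.0 - label) * math.log(1.0 - prob + eps))


def discriminator_loss(d_on_source, d_on_target):
    """The discriminator is trained to tell the two domains apart."""
    return 0.5 * (bce(d_on_source, SOURCE) + bce(d_on_target, TARGET))


def adversarial_loss(d_on_target):
    """The segmenter is rewarded when target inputs are mistaken for source."""
    return bce(d_on_target, SOURCE)


def total_segmenter_loss(seg_loss, adv_losses, weights):
    """Supervised source loss plus weighted adversarial terms, one per space
    (for example entropy, output space, point-cloud). Weights are illustrative."""
    return seg_loss + sum(w * l for w, l in zip(weights, adv_losses))


# A discriminator that separates the domains well has a low loss ...
print(discriminator_loss(0.9, 0.1))
# ... while the segmenter's adversarial loss is low only when it fools it.
print(adversarial_loss(0.9), adversarial_loss(0.1))
```

In this reading, each of the three alignment spaces contributes one adversarial term, and the supervised segmentation loss is computed on the labelled source domain only.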

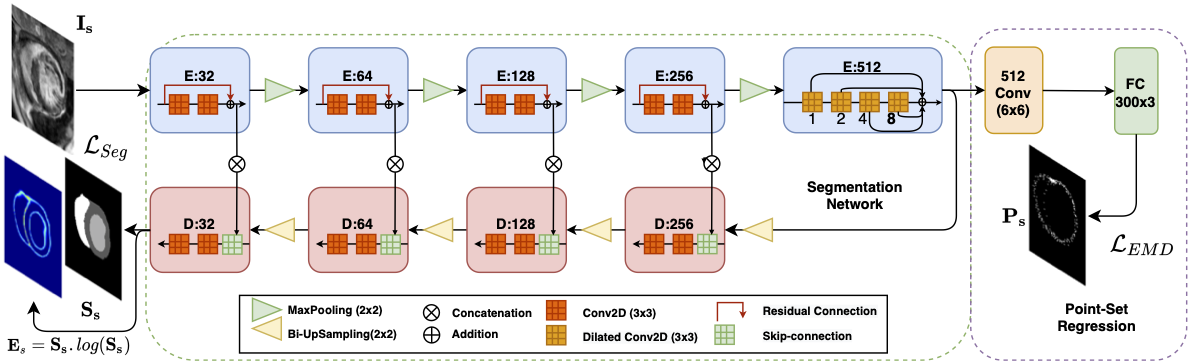

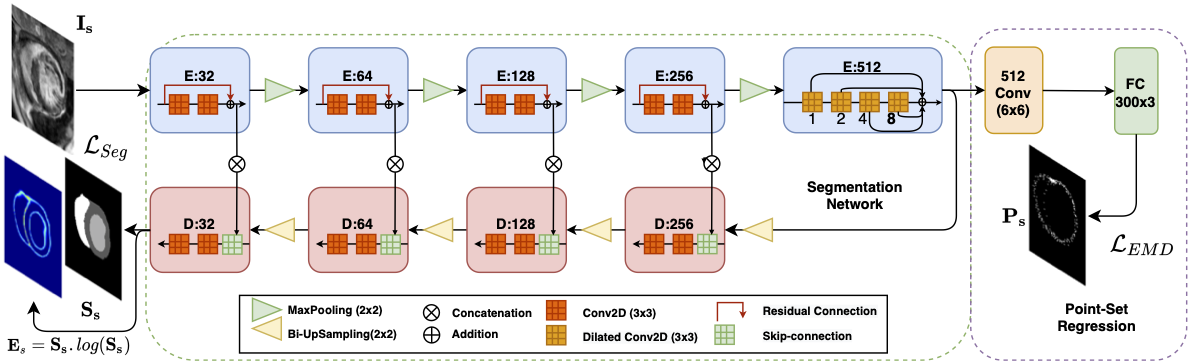

A single image from the source or the target domain is fed into its corresponding domain-specific DR-UNet segmentation network; the two networks share weights. The DR-UNet encoder extracts high-level features for both domains. These features are sent to the decoder for segmentation and to the point-net to generate a point-cloud, which is fed to D_3 for shape alignment. The DR-UNet output probabilities for the source domain are trained in a supervised manner. The *softmax* output is then sent simultaneously to the output-space alignment and entropy minimisation discriminators. The domain classifier networks, D_1 and D_2, then determine whether their input comes from the source or the target domain.
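To make the entropy branch more concrete, the sketch below computes what an entropy-minimisation discriminator is commonly given: a per-pixel map of weighted self-information, -p log p, derived from the softmax output. This follows the formulation popularised by ADVENT and is an assumption here, since the caption does not say how the entropy input is built. The helper names are hypothetical, and a single pixel with four classes stands in for a full probability map.

```python
import math


def softmax(logits):
    """Numerically stable softmax over the class logits of one pixel."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def self_information(probs, eps=1e-12):
    """Per-class weighted self-information -p * log(p) for one pixel."""
    return [-p * math.log(p + eps) for p in probs]


def normalised_entropy(probs, eps=1e-12):
    """Shannon entropy of one pixel, scaled to [0, 1] by log(num_classes)."""
    return sum(self_information(probs, eps)) / math.log(len(probs))


# A confident prediction (typical of the source domain) has low entropy,
# an uncertain one (often seen on the target domain) has high entropy.
confident = softmax([8.0, 0.0, 0.0, 0.0])
uncertain = softmax([0.0, 0.0, 0.0, 0.0])
print(normalised_entropy(confident), normalised_entropy(uncertain))
```

Aligning these maps across domains pushes target predictions towards the low-entropy, confident regime observed on the source domain.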

S. Vesal, M. Gu, R. Kosti, A. Maier, N. Ravikumar. Unsupervised Adaptation of Point-Clouds and Entropy Minimisation for Multi-modal Cardiac-MR Segmentation. arXiv.

C. Chen, Q. Dou, H. Chen, J. Qin, P.-A. Heng. Synergistic Image and Feature Adaptation: Towards Cross-Modality Domain Adaptation for Medical Image Segmentation. AAAI 2019.

T.-H. Vu, H. Jain, M. Bucher, M. Cord, P. Pérez. ADVENT: Adversarial Entropy Minimization for Domain Adaptation in Semantic Segmentation. CVPR 2019.
